Charles Nichols – Virginia Tech University

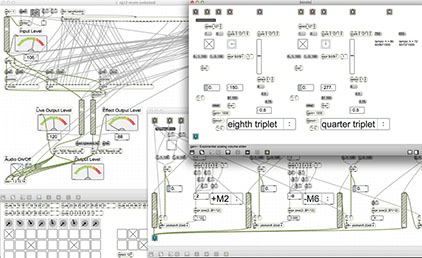

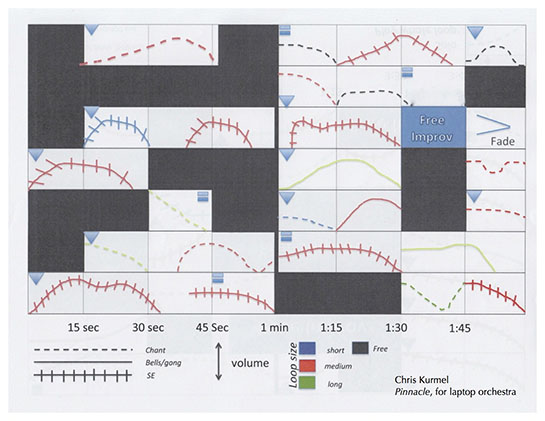

Students will learn to program interactive computer music in the Max visual programming environment, creating patches that sequence MIDI and that record, synthesize, and process audio. Using Kinect, Wii, Leap Motion, nanoKontrol, and pedal controllers, students will manipulate MIDI data and control audio synthesis and digital effects parameters. Students will also compose structured improvisations for performance as a laptop ensemble, scored in graphic notation that details their performance gestures.

Class will meet for morning and afternoon lectures and lab sessions. In the lectures, students will explore example patches while learning about Max programming and computer music theory. Topics include controller input and output (I/O), GUI display, MIDI sequencing, event timing, audio synthesis, digital effects processing, and spatialization. Scores, patches, and recordings of interactive computer music pieces will be studied and discussed in class, and graphic notation systems will be presented. In the labs, students will study the example patches further, work through tutorials, program their projects, compose their structured improvisations, notate their scores, and rehearse the compositions. The group will workshop each structured improvisation together to develop its compositional ideas.

Monday, June 16

14:00 – 17:00: Session 1: Max data input and output

Tuesday, June 17

09:00 – 12:00: Session 2: Max audio recording and processing

14:00 – 17:00: Session 3: structured improvisation composition and graphic notation

Wednesday, June 18

09:00 – 12:00: Session 4: Max data storage and timing

14:00 – 17:00: Session 5: structured improvisation rehearsal

Thursday, June 19

09:00 – 12:00: Session 6: Max audio synthesis and spatialization

Session 1: Max data input and output

In the first session, participants will explore input from computer music controllers such as the Kinect sensor, Wii Remote and Nunchuk, Leap Motion, Korg nanoKontrol, DigiTech Control8 pedal board, game controllers, joysticks, and the iPhone running apps such as c74. MIDI and OSC data from these controllers will be displayed with GUI objects and used to control VST plugin parameters for digital audio synthesis and signal processing.
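The Python sketch below illustrates, outside of Max, what happens between a controller and a plugin parameter. It decodes a raw MIDI Control Change message (status byte `0xBn`, then controller number, then a 0–127 value) and then linearly rescales the value. The `scale` function is named after Max's `scale` object and loosely resembles it. The CC number, channel, and the 0.0–1.0 target range are hypothetical choices for illustration, not settings taken from the session.

```python
from typing import Optional, Tuple


def parse_control_change(msg: bytes) -> Optional[Tuple[int, int, int]]:
    """Return (channel, controller, value) for a 3-byte MIDI Control Change
    message, or None for any other message."""
    if len(msg) != 3:
        return None
    status, controller, value = msg
    if status & 0xF0 != 0xB0:  # 0xBn is Control Change on channel n (0-15)
        return None
    return (status & 0x0F) + 1, controller, value  # report channels as 1-16


def scale(x: float, in_lo: float, in_hi: float, out_lo: float, out_hi: float) -> float:
    """Linearly map x from the range [in_lo, in_hi] to [out_lo, out_hi]."""
    return out_lo + (x - in_lo) * (out_hi - out_lo) / (in_hi - in_lo)


# Hypothetical example: a fader sending CC 7 on channel 1 at full travel,
# mapped onto a normalized 0.0-1.0 plugin parameter.
parsed = parse_control_change(bytes([0xB0, 7, 127]))
if parsed is not None:
    channel, cc, value = parsed
    print(channel, cc, scale(value, 0, 127, 0.0, 1.0))  # prints: 1 7 1.0
```

Within a Max patch, the same chain would be built from objects instead of functions. Separating parsing from scaling mirrors that design: one stage identifies the control, and another maps its range.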

Session 2: Max audio recording and processing

In the second session, participants will bring in audio through microphones or electric instruments, then record it, loop it, and process it with digital effects such as amplitude modulation, frequency modulation, waveshaping, convolution, pitch shifting, harmonizing, filtering, delays, chorus, flanging, and phasing.
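The first effect in that list, amplitude modulation, comes down to multiplying the input by a slowly varying gain. The minimal Python sketch below assumes a sinusoidal modulator and a block of float samples. In Max, a comparable effect is commonly patched by feeding a `cycle~` oscillator into a `*~` object, although the exact patch used in the session may differ. The `depth` parameter is an illustrative choice.

```python
import math
from typing import List, Sequence


def amplitude_modulate(samples: Sequence[float], sample_rate: float,
                       mod_freq: float, depth: float) -> List[float]:
    """Amplitude-modulate a block of samples with a sinusoidal modulator.

    The gain swings between 1.0 and (1.0 - depth); depth=0 leaves the
    signal unchanged and depth=1 drives it to silence at each trough.
    """
    out = []
    for n, x in enumerate(samples):
        phase = 2.0 * math.pi * mod_freq * n / sample_rate
        gain = 1.0 - depth * 0.5 * (1.0 - math.cos(phase))  # unipolar modulator
        out.append(x * gain)
    return out


# A constant input makes the modulator's shape visible directly.
block = amplitude_modulate([1.0] * 8, sample_rate=8.0, mod_freq=1.0, depth=1.0)
print([round(v, 3) for v in block])
```

At low modulation rates, this produces tremolo. Replacing the unipolar gain with a bipolar sine (a plain `x * sin(phase)`) turns it into ring modulation.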

Session 3: structured improvisation composition and graphic notation

In the third session, participants will workshop compositional ideas for structured improvisations to be performed by the laptop ensemble. They will notate performance gestures and musical parameters, such as entrances, loudness, and effects settings, using lines, shapes, colors, and textures placed along a timeline.

Session 4: Max data storage and timing

In the fourth session, participants will explore objects for storing, timing, and ordering data, perform math on data streams, and learn to automate synthesis and effects parameters with lines, envelopes, and low-frequency oscillators (LFOs).
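Two of the automation shapes mentioned above, a linear ramp and an LFO, are sketched below in Python as control-rate value streams. The ramp loosely mirrors Max's `line` object, which moves toward a target over a given time and outputs intermediate values at a grain interval. The parameter being automated and all of the numbers are hypothetical.

```python
import math
from typing import List


def line_ramp(start: float, target: float, duration_ms: float, grain_ms: float) -> List[float]:
    """Control values stepping linearly from start to target, one every grain_ms."""
    steps = max(1, round(duration_ms / grain_ms))
    return [start + (target - start) * i / steps for i in range(steps + 1)]


def sine_lfo(rate_hz: float, center: float, depth: float,
             control_rate_hz: float, count: int) -> List[float]:
    """Sample a sine LFO at control rate, oscillating between center +/- depth."""
    return [center + depth * math.sin(2.0 * math.pi * rate_hz * n / control_rate_hz)
            for n in range(count)]


# Hypothetical parameter automation: fade a wet/dry mix from 0 to 100 over one
# second in 250 ms steps, then wobble it around 50 with a 1 Hz LFO polled 4 times a second.
print(line_ramp(0.0, 100.0, 1000.0, 250.0))  # prints [0.0, 25.0, 50.0, 75.0, 100.0]
print([round(v, 2) for v in sine_lfo(1.0, 50.0, 20.0, 4.0, 4)])
```

A smaller grain or a higher control rate produces smoother automation, but it also sends more messages. Balancing smoothness against message rate is one of the timing trade-offs this session addresses.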

Session 5: structured improvisation rehearsal

In the fifth session, the laptop ensemble will rehearse the participants' structured improvisations from their graphic scores, and participants will revise their patches and pieces.

Session 6: Max audio synthesis and spatialization

In the sixth and final session, participants will work with oscillator objects to synthesize various waveforms and will examine different ways to spatialize audio in surround sound.
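The Python sketch below outlines both topics. It generates basic waveforms from a phase value, then applies pairwise constant-power panning, which is one common approach to surround spatialization. The panning code assumes speakers evenly spaced in a ring, as in a quad or eight-channel setup. The session may well use other techniques. In a Max patch, oscillator objects such as `cycle~` and `saw~` would generate these waveforms; the naive square, sawtooth, and triangle shown here alias at high frequencies.

```python
import math
from typing import List


def waveform(shape: str, phase: float) -> float:
    """Naive (non-band-limited) waveform value for a phase in [0, 1)."""
    if shape == "sine":
        return math.sin(2.0 * math.pi * phase)
    if shape == "saw":
        return 2.0 * phase - 1.0                 # ramps from -1 up to +1
    if shape == "square":
        return 1.0 if phase < 0.5 else -1.0
    if shape == "triangle":
        return 1.0 - 4.0 * abs(phase - 0.5)      # -1 at phase 0, +1 at phase 0.5
    raise ValueError(f"unknown shape: {shape}")


def render(shape: str, freq: float, sample_rate: float, count: int) -> List[float]:
    """Render count samples, computing the phase from the sample index to avoid drift."""
    return [waveform(shape, (freq * n / sample_rate) % 1.0) for n in range(count)]


def ring_pan_gains(azimuth_deg: float, num_speakers: int) -> List[float]:
    """Pairwise constant-power panning gains for speakers evenly spaced on a
    circle, with speaker 0 at 0 degrees and indices increasing with azimuth."""
    if num_speakers < 2:
        raise ValueError("need at least two speakers")
    spacing = 360.0 / num_speakers
    pos = (azimuth_deg % 360.0) / spacing
    first = int(pos) % num_speakers
    second = (first + 1) % num_speakers
    frac = pos - int(pos)                        # 0 at first speaker, toward 1 at second
    gains = [0.0] * num_speakers
    gains[first] = math.cos(frac * math.pi / 2)  # cos^2 + sin^2 = 1 keeps power constant
    gains[second] = math.sin(frac * math.pi / 2)
    return gains


# A quad setup: a source at 45 degrees sits halfway between speakers 0 and 1.
print([round(g, 3) for g in ring_pan_gains(45.0, 4)])  # prints [0.707, 0.707, 0.0, 0.0]
print(render("saw", 100.0, 1000.0, 5))
```

Using cosine and sine gains keeps the summed power constant. As a result, a source moving around the ring does not dip in loudness between speakers, as it would with linear crossfades.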