Giovanni Costantini1,2, Massimiliano Todisco1,3, Massimo Carota1

1 Department of Electronic Engineering, University of Rome “Tor Vergata”, Rome, Italy

2 IDASC Institute of Acoustics and Sensors “Orso Mario Corbino”, Rome, Italy

3 Fondazione “Ugo Bordoni”, Rome, Italy

Traditional musical sound is a direct result of the interaction between a performer and a musical instrument. This interaction rests on complex phenomena such as creativity, feeling, skill, muscular and nervous system actions, and movement of the limbs, all of which form the foundation of musical expressivity.

The way music is composed and performed changes dramatically when the synthesis parameters of a sound generator are controlled through human-computer interfaces such as a mouse, keyboard or touch screen, through input devices such as kinematic and electromagnetic sensors, or through gestural control interfaces. As regards musical expressivity, it is important to define how to map a few input data onto many synthesis parameters. In this setting, the simple one-to-one mapping laws of traditional acoustic instruments give way to a wide range of mapping strategies.

To investigate the influence that mapping has on musical expression, let us consider some aspects of Information Theory and Perception Theory:

- the quality of a message, in terms of the information it conveys, increases with its originality, that is, with its unpredictability;

- information is not the same as the meaning it conveys: a maximum-information message makes no sense if no listener is able to decode it.

A perceptual paradox illustrates how an analytic model fails to predict what we perceive from what our senses transduce: both maximum predictability and maximum unpredictability of a musical message imply minimum, or inexpressive, musical information. We can therefore say that an expressive musical message carries time-variant information that moves between maximum predictability and maximum unpredictability.
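The two extremes of the paradox can be made concrete with Shannon entropy. The sketch below is only an illustration and not part of the original system. It measures the entropy of the empirical symbol distribution (a zeroth-order estimate that ignores note order); the function name `shannon_entropy` and the note sequences are assumptions chosen for the example.

```python
import math
from collections import Counter


def shannon_entropy(symbols):
    """Entropy, in bits per symbol, of the empirical symbol distribution."""
    counts = Counter(symbols)
    total = len(symbols)
    # p * log2(1/p) summed over the distinct symbols, with p = count / total
    return sum((c / total) * math.log2(total / c) for c in counts.values())


# Illustrative note sequences (assumed data, not taken from the source).
predictable = ["C"] * 8             # the same note repeated: fully predictable
uniform = ["C", "D", "E", "F"] * 2  # four notes, all equally frequent

print(shannon_entropy(predictable))  # 0.0
print(shannon_entropy(uniform))      # 2.0
```

A single repeated note yields 0 bits per symbol, while four equally frequent notes yield the maximum of 2 bits for a four-symbol alphabet. By the argument above, neither extreme is musically expressive on its own.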

A Neural Network approach is chosen to overcome the perceptual limits mentioned above.

Interface and Mapping Strategies

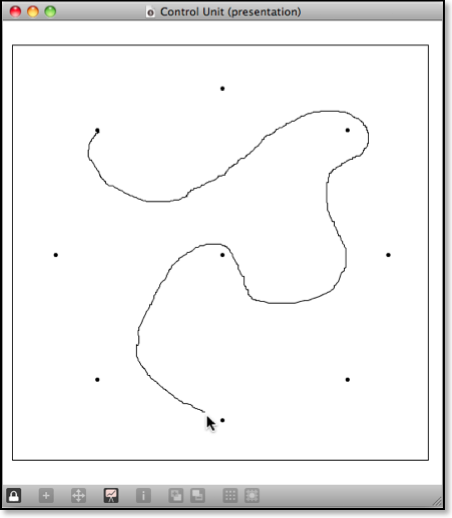

The interface has been developed in the Cycling '74 Max/MSP environment. It consists of three components: the control unit, the mapping unit and the synthesizer unit. The control unit allows the performer to control two parameters. Fig. 1 shows the Max/MSP patch that constitutes the control unit. In particular, the performer draws lines and curves in a two-dimensional box by clicking and moving the mouse inside the patch itself.

The control unit patch is built around the lcd Max/MSP object, which returns the x, y coordinates of the mouse.

The highlighted points in Fig. 1 represent the input/output patterns of the training set.

In detail, the control parameters x and y do not directly influence the parameters that rule the behavior of the sound generators; they are first processed by the mapping algorithm.

The implementation of musical expressivity is accomplished once we define the correspondence between the two x, y control parameters and the m synthesis parameters, that is to say, once we define the right mapping.

The chosen mapping strategies, by means of which the synthesis parameters are controlled, all influence the way the musician approaches the composition process.

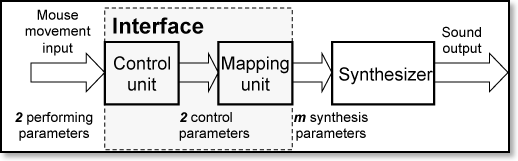

In Fig. 2, the structure of the virtual musical instrument is shown.

Fig. 2: The virtual musical instrument

Let us assume the following:

1. a predictable musical message is associated with an a priori known functional relation between the plane R^2 and the hyperspace R^m, that is to say, between the set of all pairs of inputs and the set of all configurations of the m synthesis parameters;

2. an unpredictable musical message is associated with a nonlinear and a priori unknown correspondence between R^2 and R^m.

A composer can easily follow the above assumptions by making use of a Neural Network trained as follows:

1. he fixes a point in the 2-dimensional x, y space and he links it to a desired configuration of the m synthesis parameters;

2. he repeats step 1 D times, so as to have D 2-to-m examples at his disposal; these constitute the training set for the mapping unit;

3. he chooses the neural network structure, that is to say the number of hidden neurons to use; then, he trains the neural network;

4. he explores the 2-dimensional x, y input space by moving through known and unknown points, with the aim of composing his piece of music.
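The four steps above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the authors' MATLAB implementation: it assumes one tanh hidden layer, linear outputs, per-example gradient descent on the squared error, m = 3 synthesis parameters, D = 4 examples placed at the corners of a normalized unit square, and four hidden neurons. All names (`init_network`, `forward`, `train`, `training_set`) and all numeric values are hypothetical.

```python
import math
import random


def init_network(n_in, n_hidden, n_out, rng):
    """Small random weights; each row is one neuron, with its bias stored last."""
    w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)] for _ in range(n_hidden)]
    w2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden + 1)] for _ in range(n_out)]
    return w1, w2


def forward(net, x):
    """Return hidden activations and the m outputs for one input point x."""
    w1, w2 = net
    h = [math.tanh(sum(w * v for w, v in zip(row, x + [1.0]))) for row in w1]
    y = [sum(w * v for w, v in zip(row, h + [1.0])) for row in w2]
    return h, y


def mse(net, examples):
    """Mean over examples of the summed squared output error."""
    total = 0.0
    for x, t in examples:
        _, y = forward(net, x)
        total += sum((yi - ti) ** 2 for yi, ti in zip(y, t))
    return total / len(examples)


def train(net, examples, epochs=2000, lr=0.05):
    """Per-example gradient descent with back-propagation (updates net in place)."""
    w1, w2 = net
    for _ in range(epochs):
        for x, t in examples:
            h, y = forward(net, x)
            err = [yi - ti for yi, ti in zip(y, t)]
            # Hidden-layer deltas, computed before the output weights change.
            dh = [(1.0 - h[j] ** 2) * sum(err[k] * w2[k][j] for k in range(len(w2)))
                  for j in range(len(h))]
            for k, row in enumerate(w2):
                for j, v in enumerate(h + [1.0]):
                    row[j] -= lr * err[k] * v
            for j, row in enumerate(w1):
                for i, v in enumerate(x + [1.0]):
                    row[i] -= lr * dh[j] * v
    return net


# Steps 1-2: D = 4 points in the (x, y) plane, each linked to m = 3 parameters.
training_set = [
    ([0.0, 0.0], [0.1, 0.9, 0.5]),
    ([1.0, 0.0], [0.8, 0.2, 0.5]),
    ([0.0, 1.0], [0.3, 0.3, 1.0]),
    ([1.0, 1.0], [0.9, 0.7, 0.0]),
]

# Step 3: choose the number of hidden neurons, then train.
rng = random.Random(0)
net = init_network(2, 4, 3, rng)
before = mse(net, training_set)
train(net, training_set)
after = mse(net, training_set)

# Step 4: query a point that was not in the training set.
_, params = forward(net, [0.5, 0.5])
print(before, after, params)
```

The training points correspond to the "known" positions of step 4, while a query such as (0.5, 0.5) is an "unknown" position whose synthesis parameters are determined only by how the network interpolates.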

Download

nn2js.zip contains MATLAB code that implements the mapping unit for the Max/MSP environment. In particular, starting from the inputs and the target outputs, it trains a Feed-Forward Neural Network (the Neural Network Toolbox for MATLAB is required) and generates JavaScript code suitable for the js object. It can also work with more than two inputs!
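The idea of turning trained weights into a script for the js object can be sketched as follows. This is not the output format of nn2js.zip, which is not described here. It is a hypothetical Python exporter that assumes one tanh hidden layer, linear outputs, and weight matrices stored one row per neuron with the bias last. The generated script relies on the `inlets`, `outlets`, `arrayfromargs` and `outlet` facilities of the Max js object; `export_js`, `JS_TEMPLATE`, `W1`, `W2` and `layer` are names invented for the example.

```python
import json

# Hypothetical template; doubled braces are literal braces for str.format.
JS_TEMPLATE = """// Illustrative mapping unit for a Max js object (not nn2js output).
inlets = 1;
outlets = 1;

var W1 = {w1};  // hidden layer: one row per neuron, bias last
var W2 = {w2};  // output layer: one row per synthesis parameter, bias last

// tanh written with Math.exp, in case the engine lacks Math.tanh
function tanh(a) {{
    var e = Math.exp(-2 * Math.abs(a));
    var t = (1 - e) / (1 + e);
    return a < 0 ? -t : t;
}}

function layer(W, v, squash) {{
    var out = [];
    for (var r = 0; r < W.length; r++) {{
        var s = W[r][v.length];  // bias term
        for (var c = 0; c < v.length; c++) s += W[r][c] * v[c];
        out.push(squash ? tanh(s) : s);
    }}
    return out;
}}

// Called by Max when a list of inputs (for example x y) arrives at the inlet.
function list() {{
    var x = arrayfromargs(arguments);
    outlet(0, layer(W2, layer(W1, x, true), false));
}}
"""


def export_js(w1, w2):
    """Embed the weight matrices as JavaScript array literals in the template."""
    return JS_TEMPLATE.format(w1=json.dumps(w1), w2=json.dumps(w2))


# Tiny assumed network: 2 inputs, 1 hidden neuron, 1 output.
js_source = export_js([[0.1, -0.2, 0.3]], [[0.5, 0.0]])
print(js_source)
```

Because the layer sizes are read from the embedded arrays, the same template handles any number of inputs, consistent with the remark that the mapping unit is not limited to two.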

References

- G. Costantini, G. Saggio, M. Todisco, A Glove Based Adaptive Sensor Interface for Live Musical Performances, 1st SENSORDEVICES International Conference on Sensor Device Technologies and Applications, Venice, Italy, July 18-25, 2010.

- G. Costantini, M. Todisco, G. Saggio, A Cybernetic Glove to Control a Real Time Granular Sound Synthesis Process, IMCIC International Multi-Conference on Complexity, Informatics and Cybernetics, Orlando, Florida, USA, April 6-9, 2010.

- G. Costantini, M. Todisco, G. Saggio, A Wireless Glove to Perform Music in Real Time, 8th WSEAS International Conference on APPLIED ELECTROMAGNETICS, WIRELESS and OPTICAL COMMUNICATIONS, Penang, Malaysia, March 23-25, 2010.

- G. Costantini, M. Todisco, M. Carota, D. Casali, A New Physical Sensor Based on Neural Network for Musical Expressivity, Proceedings of the 13th AISEM Italian Conference Sensors and Microsystems, Rome, Italy, February 19-21, 2008, pp. 281-288.

- G. Costantini, M. Todisco, M. Carota, A Neural Network Based Interface to Real Time Control Musical Synthesis Processes, Proceedings of the 11th WSEAS International Conference on CIRCUITS, Agios Nikolaos, Crete Island, Greece, July 23-25, 2007, pp. 41-45.

- G. Costantini, M. Todisco, M. Carota, G. Maccioni, D. Giansanti, A New Adaptive Sensor Interface for Composing and Performing Music in Real Time, Proc. of 2nd IEEE International Workshop on Advances in Sensors and Interfaces, 26-27 June 2007, Bari, Italy, pp. 106-110.

- Bongers, B. 2000, Physical Interfaces in the Electronic Arts. Interaction Theory and Interfacing Techniques for Real-time Performance, In M. Wanderley and M. Battier, eds. Trends in Gestural Control of Music. Ircam – Centre Pompidou.

- Orio, N. 1999, A Model for Human-Computer Interaction Based on the Recognition of Musical Gestures, Proceedings of the 1999 IEEE International Conference on Systems, Man and Cybernetics, pp. 333-338.

- Abraham Moles, Information Theory and Aesthetic Perception, University Of Illinois Press (1969).

- Rudolf Arnheim, Entropy and Art: An Essay on Disorder and Order, University of California Press (January 29, 1974).

- Hertz J., A. Krogh & R.G. Palmer, Introduction to the theory of neural computation, Addison-Wesley Publishing Company, Reading Massachusetts, 1991.